The free alternative to OpenAI and Anthropic. Your all-in-one AI stack - run powerful language models, autonomous agents, and document intelligence locally on your hardware.

No cloud, no limits, no compromise.

Drop-in replacement for the OpenAI API - a modular suite of tools that work seamlessly together or independently.

Start with LocalAI’s OpenAI-compatible API, extend with LocalAGI’s autonomous agents, and enhance with LocalRecall’s semantic search - all running locally on your hardware.

Open source, MIT licensed.

Why choose LocalAI?

OpenAI API Compatible - Run AI models locally with our modular ecosystem. From language models to autonomous agents and semantic search, build your complete AI stack without the cloud.

LLM Inferencing

LocalAI is a free, open source OpenAI alternative. Run LLMs and generate images, audio, and more locally on consumer-grade hardware.

Agentic-first

Extend LocalAI with LocalAGI, an autonomous AI agent platform that runs locally, no coding required. Build and deploy autonomous agents with ease. Interact with REST APIs or use the WebUI.

Memory and Knowledge base

Extend LocalAI with LocalRecall, a local REST API for semantic search and memory management. Perfect for AI applications.

OpenAI Compatible

Drop-in replacement for OpenAI API. Compatible with existing applications and libraries.
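As a rough illustration of what "drop-in" means in practice, the sketch below builds an OpenAI-style chat completion request aimed at a local server instead of the cloud. The base URL (`http://localhost:8080/v1`), the model name `my-local-model`, and both helper functions are assumptions made for this example; adjust them to match your own installation.

```python
import json
import urllib.request

# Assumption: a LocalAI server is listening on this address.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(prompt, model="my-local-model", base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        # Hypothetical model name; use one that is installed locally.
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a server running, `send_chat_request(build_chat_request("Hello"))` would return the model's reply. Existing OpenAI client libraries can usually be redirected the same way, by changing only their base URL setting.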

No GPU Required

Run on consumer-grade hardware. No need for expensive GPUs or cloud services.

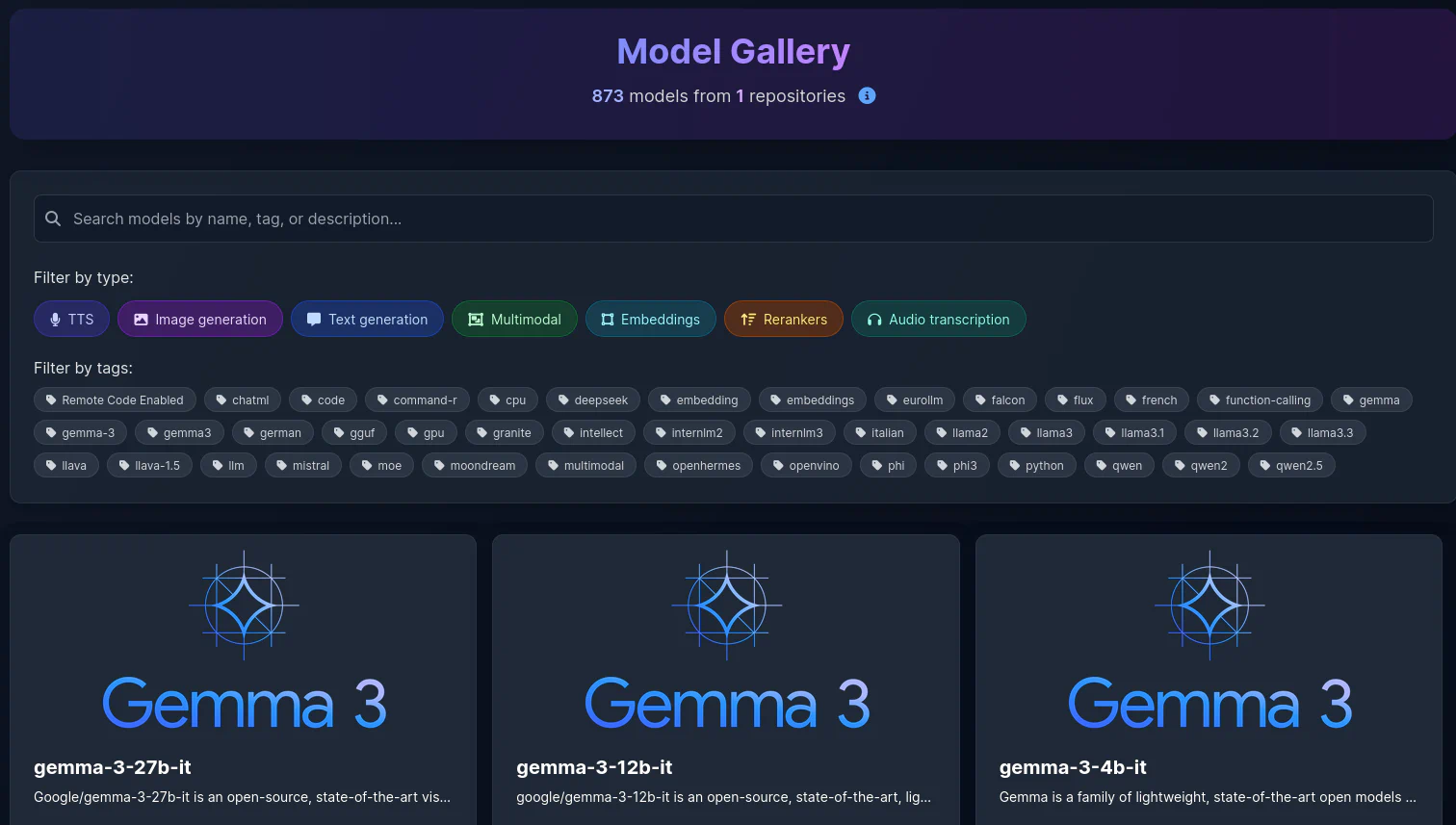

Multiple Models

Support for various model families including LLMs, image generation, and audio models. Supports multiple backends for inferencing, including vLLM, llama.cpp, and more. You can switch between them as needed and install them from the Web interface or the CLI.
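Switching between models typically comes down to changing the `model` field of a request. The sketch below assumes the server exposes an OpenAI-style model listing (a JSON object with a `data` array of entries carrying an `id`); the helper names and the model names in the usage note are hypothetical.

```python
import json


def model_ids(models_response_text):
    """Extract model ids from an OpenAI-style model listing (JSON text)."""
    body = json.loads(models_response_text)
    return [entry["id"] for entry in body.get("data", [])]


def pick_model(available, preferred):
    """Return the first preferred model that is installed, or None."""
    for name in preferred:
        if name in available:
            return name
    return None
```

For example, given a listing that contains `text-model-a` and `image-model-b`, `pick_model(ids, ["text-model-z", "text-model-a"])` falls back to `text-model-a` because the first choice is not installed.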

Privacy Focused

Keep your data local. No data leaves your machine, ensuring complete privacy.

Easy Setup

Simple installation and configuration. Get started in minutes with a binary install, Docker, Podman, Kubernetes, or a local installation.

Community Driven

Active community support and regular updates. Contribute and help shape the future of LocalAI.

Extensible

Easy to extend and customize. Add new models and features as needed.

Peer-to-Peer

LocalAI supports decentralized LLM inference, powered by a peer-to-peer system based on libp2p. It can be used on a local or remote network and is compatible with any LLM. It works either in federated mode or by splitting model weights.

Open Source

MIT licensed. Free to use, modify, and distribute. Community contributions welcome.

Run AI models locally with ease

LocalAI makes it simple to run various AI models on your own hardware. From text generation to image creation, autonomous agents to semantic search - all orchestrated through a unified API.

- OpenAI API compatibility

- Multiple model support

- Image understanding

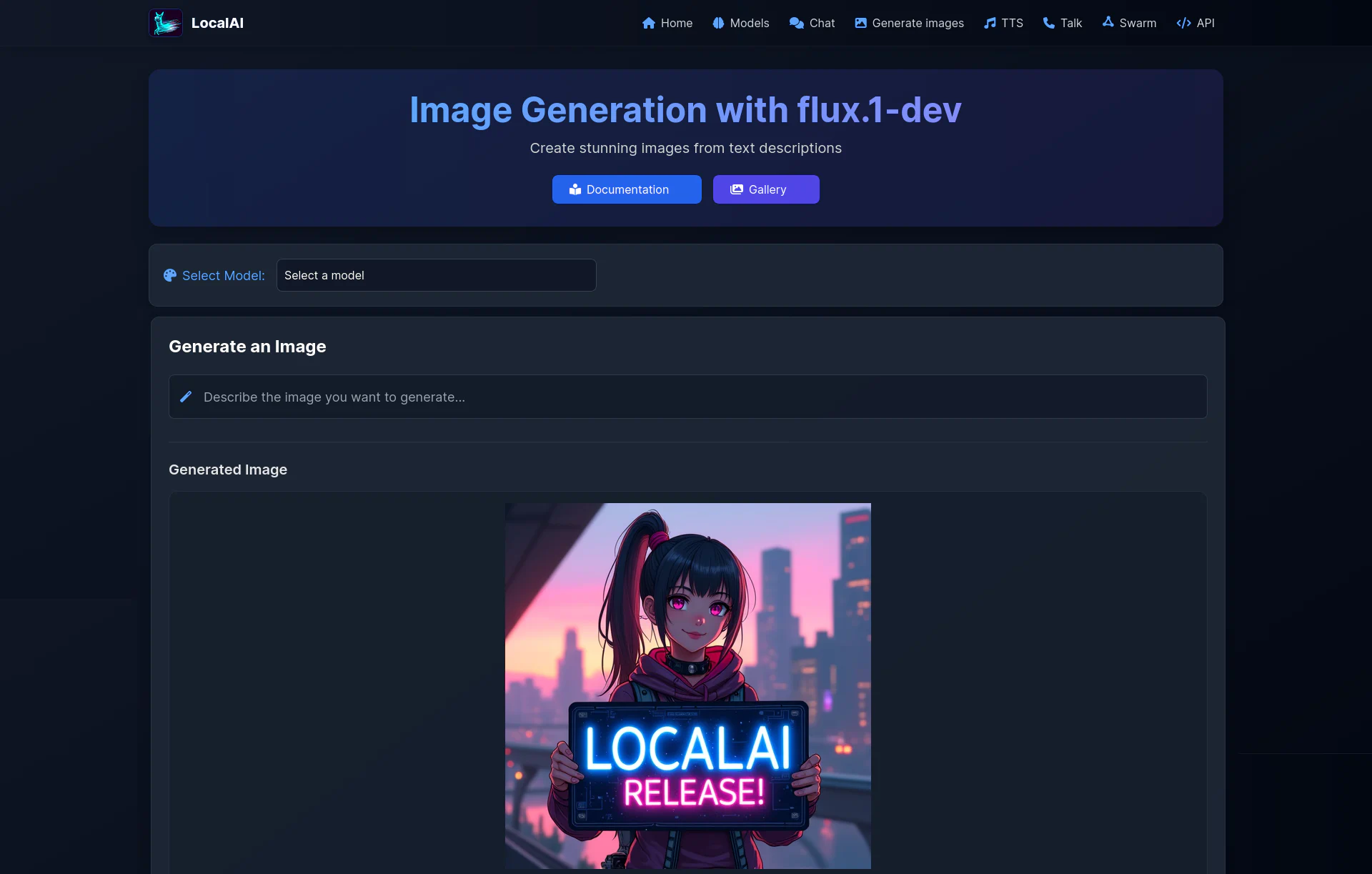

- Image generation

- Audio generation

- Voice activity detection

- Speech recognition

- Video generation

- Privacy focused

- Autonomous agents with LocalAGI

- Semantic search with LocalRecall

- Agent orchestration